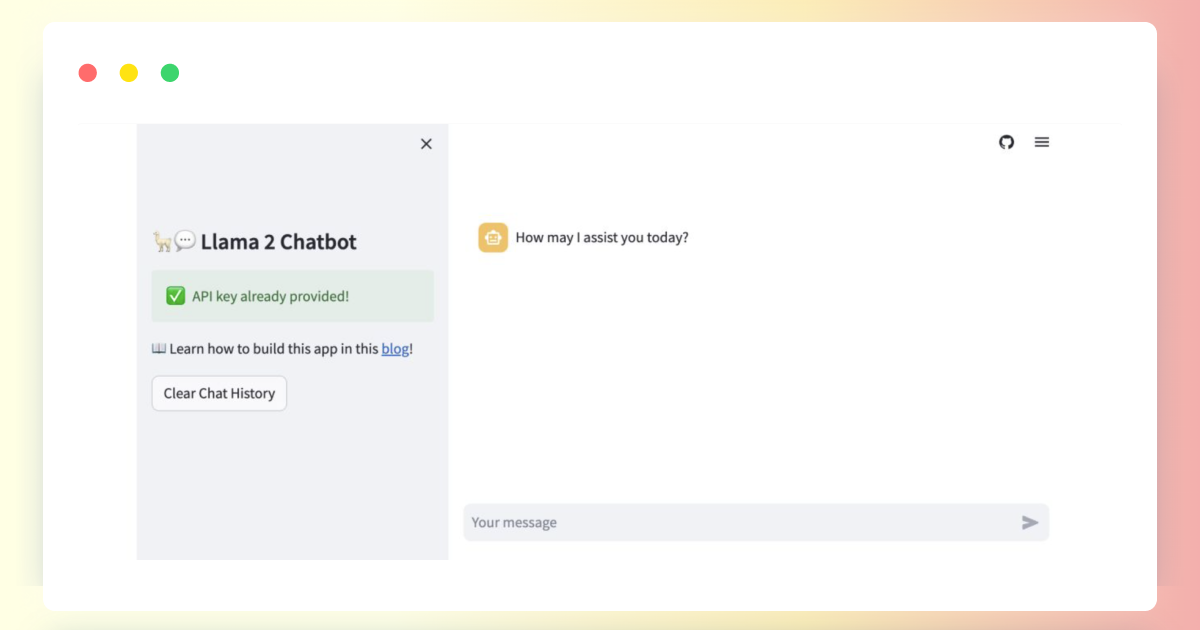

Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets. Send me a message or upload an ... This Space demonstrates the model Llama-2-7b-chat by Meta, a Llama 2 model with 7B parameters fine-tuned for chat instructions. Feel free to play with it, or duplicate it to run generations without a ... Ask any question to two anonymous models (e.g., ChatGPT, Claude, Llama) and vote for the better one. You can continue chatting until you identify a winner. Vote won't be counted if model ... Llama 2 is pretrained using publicly available online data. An initial version of Llama Chat is then created through the use of supervised fine-tuning. Across a wide range of helpfulness and safety benchmarks, the Llama 2-Chat models perform better than most open models and achieve comparable performance to ChatGPT.

In this article, we'll reveal how to create your very own chatbot using Python and Meta's Llama2 model. If you want help doing this, you can schedule a FREE call with us at ... In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 ... In this video, I will show you how to use the newly released Llama-2 by Meta as part of LocalGPT. LocalGPT lets you chat with your own documents. In this video, we'll use the latest Llama 2 13B GPTQ model to chat with multiple PDFs. We'll use the LangChain library to create a chain that can retrieve relevant documents and answer ...

Llama 2 comes in a range of parameter sizes (7B, 13B, and 70B) as ... Llama 2 is a collection of pretrained and fine-tuned generative text models ranging ... Llama 2 models are available in three parameter sizes (7B, 13B, and 70B) and come in ... 70 billion parameter model fine-tuned on chat. Llama2-70B-Chat is a leading AI model for text completion, comparable with ... More than 48GB of VRAM will be needed for 32k context, as 16k is the maximum that fits in 2x ...
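To put the three parameter sizes in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy at different precisions. It assumes the nominal parameter counts (7, 13, and 70 billion) and simple bytes-per-parameter figures; it ignores activations, the KV cache, and framework overhead, so real VRAM requirements will be higher. The function and dictionary names are made up for illustration.

```python
# Rough weight-only memory estimate for the three Llama 2 sizes.
# Assumption: nominal parameter counts; real checkpoints differ slightly.
PARAM_COUNTS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Assumption: bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Return the approximate size of the weights in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n_params in PARAM_COUNTS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(n_params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"Llama 2 {name} -> {row}")
```

Under these assumptions the 7B model needs about 14 GB for fp16 weights, which is why quantized variants are popular on consumer GPUs.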

Fine-tune Llama 2 with DPO: a guide to using the TRL library's DPO method to fine-tune Llama 2 on a specific dataset. Instruction-tune Llama 2: a guide to training Llama 2 to ... This blog post introduces the Direct Preference Optimization (DPO) method, which is now available in the TRL library, and shows how one can fine-tune the recent Llama v2 7B-parameter ... The tutorial provided a comprehensive guide on fine-tuning the Llama 2 model using techniques like QLoRA, PEFT, and SFT to overcome memory and compute limitations. In this section, we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware and show how to fine-tune the 7B version of Llama 2 on a ... This tutorial will use QLoRA, a fine-tuning method that combines quantization and LoRA. For more information about what those are and how they work, see this post.
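To see why LoRA-style methods ease the memory and compute limits mentioned above, the sketch below counts trainable parameters for a single weight matrix. LoRA freezes the original matrix and trains two small low-rank factors instead, so the trainable count is `rank * (d_in + d_out)` rather than `d_in * d_out`. The 4096 x 4096 shape and rank 8 are illustrative assumptions, not values taken from the tutorials quoted here, and the helper names are hypothetical.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Assumptions for illustration only: one square projection matrix and a small rank.
HIDDEN = 4096
RANK = 8

full = full_params(HIDDEN, HIDDEN)
lora = lora_trainable_params(HIDDEN, HIDDEN, RANK)

print(f"full matrix: {full:,} params")
print(f"LoRA factors: {lora:,} params ({lora / full:.4%} of the full matrix)")
```

QLoRA applies the same idea on top of a quantized, frozen base model, so only the small adapter matrices receive gradients.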
